The Economic Implications of AI: How Should We Regulate Artificial Intelligence to Ensure Ethicality?

December 15, 2021

Artificial Intelligence (AI) plays a central role in our everyday lives. Familiar examples include the maps and navigation algorithms in our GPS devices, facial detection and recognition on our smartphones, autocorrect in our texting apps, and social media advertising content pre-selected for us by an algorithm. It is strange to think of a world without this technology; however, AI may pose threats to all of humankind, with white-collar workers being the most susceptible social group.

In 2019, Elon Musk spoke at an AI summit in China and mentioned that “AI will be the best or worst thing ever for humanity.” Some argue that humans should embrace AI, since its algorithmic efficiency may bring about creative, productive, and societal advancement. At the same summit, Musk also delivered the infamous quote, “AI means love in Chinese.” As the CEO of two juggernaut companies, Tesla and SpaceX, Musk shows an apparent enthusiasm that reflects the tech world’s eagerness for AI and its development. On the other end of the spectrum, some worry that AI may threaten the human race and take control of society, as it does in popular media depictions such as The Matrix or The Terminator.

Public opinion regarding AI is mixed. According to a 2020 study by Pew Research, “A median of about half (53%) say the development of artificial intelligence, or the use of computer systems designed to imitate human behaviors, has been a good thing for society, while 33% say it has been a bad thing.” As mentioned above, much of the disapproval stems from the fear of machine learning systems acting autonomously, without human control. However, Dokyun Lee, Assistant Professor of Business Analytics at CMU’s Tepper School of Business, offers reassurance that “machine learning works best as a tool, and that the art of human interpretation and reasoning cannot be replaced by artificial intelligence.”

How can we define AI autonomy as “good” or “bad”? Should we define it by sorting and classifying AI’s instructions according to fundamental human values and ethics? Can we impose ethics or morality on AI programming? What counts as good versus evil means different things to different people. Simply put, AI follows a set of instructions entered by a programmer. Therefore, programmers will have to devise and set rules that limit AI, to ensure that it fulfills its purpose and does not achieve total autonomy.

Stephen Hawking, a proponent of AI regulation, warned that AI could bring dangers like “powerful autonomous weapons, or new ways for the few to oppress the many.” While AI’s efficiency may cause the disappearance of several professions across different income levels and categories, the social group most affected is low-income workers.

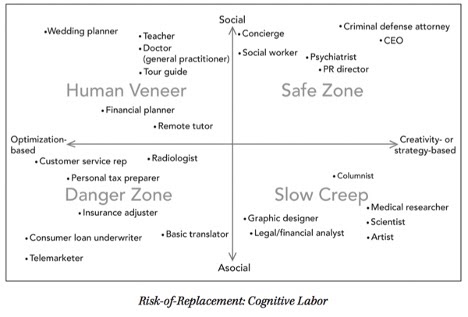

As seen in the Risk-of-Replacement: Cognitive Labor graph above, some jobs are more at risk than others. Since AI has trouble with highly strategic or creative tasks with unclear goals, and with highly social tasks that require a nuanced understanding of a person’s emotions, it threatens different jobs at different paces. However, the underlying similarity is that the social group cultivating and implementing AI consists of high-ranking tech company officials with high incomes and net worth. Thus, we should think about the consequences of AI. Whom does it affect the most? Why should we institute policies to regulate AI? What kinds of policies should those be?

Sources:

https://www.youtube.com/watch?v=zqoXSbnNMjM

https://www.bbc.com/news/technology-30290540

https://www.cmu.edu/tepper/news/stories/2019/august/dokyun-lee-machine-learning.html